Cloud-native solutions usually launch with high hopes: their design is flexible, they scale on demand, and they can be deployed quickly across regions. Beneath the surface, though, things go wrong: untracked APIs leak private data, credentials slip into code, and threats get past transient workloads even when strong protections are in place. Security teams keep asking the same questions: “Do we need more firewalls? Stricter entry points?” These measures help, but only up to a point. You can’t see the root causes of cloud and API interaction failures unless you look at them from a different angle. This paper treats cloud and API security as a systemic issue and focuses on advanced patterns: subtle, interconnected vulnerabilities that conventional checks often overlook. Drawing on real incidents and hands-on experience, we’ll discuss how recognizing these patterns can turn reactive firefighting into precise, proactive defense of cloud-native systems.

The New Attack Surface: A Closer Look at Four Dimensions

We need to rethink the basics of cloud security because environments are more complex now. We break the problem into four dimensions that are easy to understand, and in each one we focus on the flaws that aren’t obvious:

Identity and Access: Identity has become the fundamental control point, extending beyond the network perimeter. Cloud IAM rules and machine-to-machine credentials now secure critical pathways. The subtle issues here include IAM drift (roles accumulating privileges over time) and service identities that nobody can see or account for.

APIs and Services: Beyond documented endpoints, microservices and integrations create shadow APIs that are hard to track. An API that isn’t inventoried or restricted is like a door left open. Unchecked service mesh trust and unsanctioned endpoints are two problems that enlarge the attack surface.

Workloads and Environments: In multi-cloud setups, ephemeral containers and serverless functions come and go quickly alongside static servers. This shift raises new concerns, such as short-lived instances that hold long-lived secrets, or new clusters whose insecure default settings open ports. Ephemeral computing is powerful, yet any mistake can quickly be replicated at massive scale.

Policy and Visibility: Staying ahead takes more than alerts that fire after something goes wrong. We also need to enforce policies continuously and catch problems before they happen. Traditional scans only provide snapshots; in the cloud era, you need dynamic guardrails. Policy-as-code and adaptive monitoring are two examples of patterns that link symptoms (like unusual access spikes and token misuse) to their root causes (like IAM misconfigurations and trust gaps). These patterns close the loop from detection to remediation.

Each of these classic areas (identity, API, workload, and visibility) has well-known best practices. But the areas are connected by complicated failure patterns. A small privilege change (Identity) might grant access to a hidden endpoint (API), and a new container (Workload) might bypass a policy check (Visibility). The next sections show how to detect these hidden hazards and deal with them.

Advanced Patterns in Action: How to Find Hidden Risks

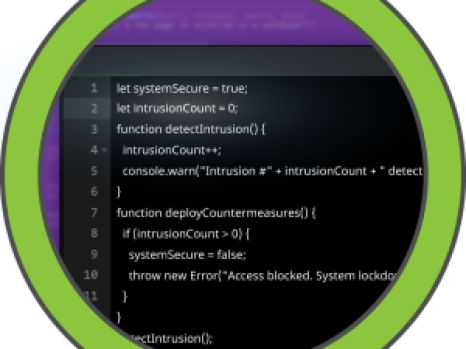

In a cloud-native system, the obvious problem is often just a symptom of a deeper one. The best way to find out what’s really going on in an incident is to identify the underlying pattern and fix it at its source:

- Finding Exposures That Are Hidden

Rate Limit Evasion (APIs): What if your public API doesn’t seem to be getting much use, yet a lot of data is being scraped? One clue is traffic passing through an endpoint that you didn’t know about and that your rate limits don’t cover. In practice, attackers call endpoints that aren’t part of your documented API, such as a shadow API or debug interface that a well-meaning team set up without putting it behind the API gateway. Action: Either bring this endpoint under management right away or shut it down. In the long run, run discovery scans for shadow APIs (for example, check load balancer logs and code repos for undocumented endpoints) to find holes in your API surface.
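
As a rough illustration of such a discovery scan, the sketch below compares routes observed in access logs against a documented inventory. The `DOCUMENTED_ROUTES` catalog, the `(method, path)` log tuple shape, and the ID-collapsing heuristic are all assumptions made for this example; a real scan would parse your load balancer's own log format.

```python
import re
from collections import Counter

# Hypothetical catalog of documented routes; "{id}" marks a path parameter.
DOCUMENTED_ROUTES = {
    ("GET", "/v1/orders/{id}"),
    ("POST", "/v1/orders"),
}

# Heuristic (an assumption): purely numeric or UUID-like segments are IDs.
_ID_SEGMENT = re.compile(r"^(\d+|[0-9a-fA-F-]{32,36})$")


def normalize_path(path):
    """Drop the query string and collapse ID-like segments into '{id}'."""
    path = path.split("?", 1)[0]
    segments = [
        "{id}" if _ID_SEGMENT.match(segment) else segment
        for segment in path.strip("/").split("/")
    ]
    return "/" + "/".join(segments)


def find_shadow_routes(log_entries, documented=DOCUMENTED_ROUTES):
    """Count (method, route) pairs seen in traffic but absent from the catalog."""
    seen = Counter(
        (method.upper(), normalize_path(path)) for method, path in log_entries
    )
    return {route: hits for route, hits in seen.items() if route not in documented}


sample_log = [
    ("GET", "/v1/orders/1234"),
    ("GET", "/debug/export?table=customers"),
    ("GET", "/debug/export?table=payments"),
    ("post", "/v1/orders"),
]
for (method, route), hits in find_shadow_routes(sample_log).items():
    print(f"undocumented: {method} {route} ({hits} hits)")
```

Anything this reports is a candidate to either place behind the gateway or shut down; the normalization step matters because raw paths with embedded IDs would otherwise look like thousands of distinct endpoints.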

Identity Ambiguity (Identity): The logs show that someone with admin-level access to data initiated an action, but you can’t tell which account or service it was. The symptom is a worrying absence of attribution: a critical action with no clear actor behind it. The root cause is shared or reused credentials. Perhaps more than one microservice used the same cloud access key, or an automation ran with a personal token. This ambiguity makes incidents harder to investigate. Action: Give each service and component its own workload identity. For example, you might use cloud IAM roles bound to each microservice, or a framework like SPIFFE, so that each workload has a distinct identity. With unique identities, you can trace every API call to its source. This means getting rid of shared keys, using short-lived credentials, and propagating identity context throughout your service mesh so that nothing runs without proof of identity.
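
One way to surface shared credentials before they cause an attribution problem is to group audit events by key and look for keys with more than one user. The following is a minimal sketch; the `(access_key_id, service_name)` event shape and the sample names are hypothetical, and real audit logs would need to be mapped into this form first.

```python
from collections import defaultdict


def find_shared_keys(events):
    """Return {access_key_id: [services]} for keys used by more than one service.

    `events` is an iterable of (access_key_id, service_name) pairs, an
    assumed shape for this example.
    """
    services_by_key = defaultdict(set)
    for access_key_id, service_name in events:
        services_by_key[access_key_id].add(service_name)
    return {
        key: sorted(services)
        for key, services in services_by_key.items()
        if len(services) > 1
    }


audit_events = [
    ("key-001", "billing"),
    ("key-001", "analytics"),  # same key, different service: ambiguous
    ("key-002", "checkout"),
    ("key-002", "checkout"),
]
print(find_shared_keys(audit_events))
```

Each key the function returns is a place where a log entry cannot be tied to a single workload, and so a candidate for replacement with per-service identities.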

- Bridging Signals and Setting Things Up Wrong

Permissive Access Drift (IAM): An internal analytics service starts reading customer data that it doesn’t ordinarily touch. Anomaly detection catches the symptom: this service has never accessed these tables before. The root cause is IAM policy drift. Last month, someone might have hastily granted it ReadAnyDatabase permissions as a quick fix, and the grant was never revoked. Overly broad roles are a quiet risk: people add them for convenience and then forget about them. Action: Trace the anomalous access to the IAM policy that allowed it. Revoke or narrow that policy right away, and look for similar flaws in other roles. From now on, treat IAM changes like code: review every policy modification, and run automated checks that flag wildcards or privileges that don’t match the service’s normal behavior. This policy-as-code approach catches overly permissive changes before they can be exploited.
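
A policy-as-code check of this kind can be quite small. The sketch below assumes an AWS-style JSON policy document (`Statement`, `Effect`, `Action`, `Resource`) that has already been loaded into a dictionary; the sample policy and the two rules it enforces are illustrative, not a complete linter.

```python
def _as_list(value):
    """Policy fields may hold a single value or a list; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_permissive_statements(policy):
    """Return human-readable findings for Allow statements with wildcards."""
    findings = []
    for index, statement in enumerate(_as_list(policy.get("Statement"))):
        if statement.get("Effect") != "Allow":
            continue
        wildcard_actions = [
            action
            for action in _as_list(statement.get("Action"))
            if action == "*" or action.endswith(":*")
        ]
        if wildcard_actions:
            findings.append(f"statement {index}: wildcard action {wildcard_actions}")
        if "*" in _as_list(statement.get("Resource")):
            findings.append(f"statement {index}: applies to all resources")
    return findings


# Illustrative policy: the second statement is the kind of quick fix that drifts.
proposed_policy = {
    "Statement": [
        {"Effect": "Allow", "Action": ["s3:GetObject"], "Resource": "arn:aws:s3:::reports/*"},
        {"Effect": "Allow", "Action": "rds:*", "Resource": "*"},
    ]
}
for finding in find_permissive_statements(proposed_policy):
    print(finding)
```

Run as a CI step, a non-empty findings list would fail the build, so a broad grant has to be justified in review instead of slipping in unnoticed.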

Execution Context Gaps (Service Mesh): In a zero-trust service mesh, every call should be authenticated. Yet an inter-service call went through without a valid token. A security test shows that Service A can call Service B’s API by hitting its IP address directly, which is not how the system was designed to work. The symptom can be subtle. The root cause is a mismatch in the execution context used for service mesh authorization. Service B may have assumed that any call from inside the cluster was internal and trusted (based on network location), while the mesh expected an mTLS identity, which the direct IP call did not supply. This gap lets callers bypass authentication. Action: Close the gap by enforcing trust at both the application and network layers. Require all calls between services to go through the mesh’s identity-aware proxy, and block those that don’t. In practice, enforce mutual TLS and make sure that no other network path can reach Service B’s port without the correct credentials. To confirm that your policies really enforce context-aware authentication at every layer, also try making requests in unintended ways, including direct IP-based calls.

- Anticipating Short-Term Risks

Stolen Token Replay (Ephemeral workloads): A serverless function runs for two minutes, accesses a storage bucket, and then stops as it should. Hours later, logs show that the same function’s credentials were used from an IP address in a different country. This is a classic token replay attack: the credentials for a temporary workload outlived the task itself. The token’s lifetime exceeded the workload’s lifetime, and an attacker obtained it, perhaps by scraping memory or a log. The function’s short runtime gave a false sense of safety, because the token remained valid long after the function had finished. Action: Match the token’s lifespan to how long it will actually be used, so that exposure stays in proportion to need. In practice, issue dynamic, short-lived credentials scoped to the context in which the function runs. For example, you could use cloud instance identity tokens that expire a few minutes after the function finishes, or have the function revoke its token when it exits. When tokens die with the workload, replay becomes considerably harder for an attacker.
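
The detection side of this scenario can be sketched as a join between workload end times and credential-use events. The data shapes here (a dictionary of end times and `(workload_id, used_at, source_ip)` tuples) and the 30-second grace period are assumptions for illustration only.

```python
from datetime import datetime, timedelta, timezone


def find_post_termination_use(workload_ends, token_events, grace=timedelta(seconds=30)):
    """Return token events that occurred after the owning workload had ended.

    workload_ends: {workload_id: end datetime}
    token_events:  iterable of (workload_id, used_at, source_ip)
    grace:         allowance for clock skew and in-flight requests (assumed value)
    """
    suspicious = []
    for workload_id, used_at, source_ip in token_events:
        ended_at = workload_ends.get(workload_id)
        if ended_at is not None and used_at > ended_at + grace:
            suspicious.append((workload_id, used_at, source_ip))
    return suspicious


finished = datetime(2024, 1, 1, 12, 2, tzinfo=timezone.utc)
ends = {"fn-123": finished}
events = [
    ("fn-123", finished - timedelta(minutes=1), "10.0.0.5"),   # during the run
    ("fn-123", finished + timedelta(hours=3), "203.0.113.9"),  # hours afterwards
]
for workload_id, used_at, source_ip in find_post_termination_use(ends, events):
    print(f"{workload_id}: credential used at {used_at.isoformat()} from {source_ip}")
```

Detection like this is a backstop. The primary fix remains a token lifetime short enough that a late replay fails on its own.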

Orphaned Role Binding (temporary environments): A new Kubernetes cluster starts with a default cloud role that was meant for development and testing, but the cluster ends up being used in production. At first, no alert fires. Weeks later, someone outside the cluster can list resources in the cloud environment using the pod credentials. A penetration test or breach report reveals a privilege that shouldn’t exist. The root cause was configuration drift in the ephemeral setup: the cluster was built from an old template that granted too much power. Temporary environments inherit old settings from their templates. When an environment is torn down, its mistakes are forgotten, but they reappear the next time someone uses that template. Action: Handle infrastructure-as-code with the same care as application code. Add checks to new deployments that flag over-privileged roles. For example, don’t use default credentials, and grant only the least access necessary. Being temporary doesn’t exempt something from review. Every temporary job should receive only the permissions it needs, when it needs them, and any activity that needs greater permissions should get an explicit, time-limited elevation. By building these checks into your CI/CD and cloud automation, you can prevent problems before they happen.

- Feeding the Loop: Continuous Hardening

These advanced patterns depend on feedback and continuous improvement. Every incident or anomaly is a chance to improve the system. Did you notice someone using an API endpoint that you didn’t know about? Add automated API discovery scans so that you find the next one before it is exploited. Did you see a one-off privilege escalation on a cloud account? Set up a guardrail or policy test to make sure it doesn’t happen again. This iterative approach turns each error into a lesson. The default posture of the cloud platform gets stronger over time: unknowns become known, and misconfigurations get corrected automatically. By feeding what you learn at runtime back into design and code, you turn one-time fixes into lasting, automated protections.

Important Advanced Security Patterns in the Spotlight

Let’s look at some advanced cloud and API security patterns and how to handle them in the real world:

Permissive-by-Default IAM Drift (Cloud IAM, RBAC rules): What It Means: Identity roles and policies accumulate rights over time, especially when defaults or quick fixes grant access that isn’t needed. It is “permissive by default” because access only grows until it is deliberately cut back. Watch for policies with wildcard (“*”) permissions, trust relationships that let any service assume a role, roles that haven’t been reviewed in years, and service accounts shared by more than one application. Another indicator is an anomaly in access logs, such as a low-privilege service suddenly performing high-privilege actions, which suggests it holds hidden permissions. Action: Enforce strict least privilege and review access regularly. Use automation to detect overly permissive rules. For instance, flag any IAM role that grants admin or broad access to resources. If you find drift, fix it right away, and make sure that any new permissions you add are actually needed. Treat IAM settings as living code that is version-controlled and covered by unit tests. For instance, a new policy can’t be deployed if it violates privilege limits. This stops access rights from creeping upward and keeps the environment “secure by default” instead.
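
A regular access review can start from a simple question: which granted permissions has this role never exercised? The sketch below answers it by matching observed actions against granted action patterns. The action names are illustrative, and the case-insensitive glob matching is a simplification of how a real IAM engine evaluates policies.

```python
from fnmatch import fnmatchcase


def unused_grants(granted_actions, observed_actions):
    """Return granted action patterns that no observed action matched.

    Patterns may contain '*' wildcards (for example "dynamodb:*").
    Matching is done on lowercased strings, an assumption of this sketch.
    """
    observed = {action.lower() for action in observed_actions}
    return sorted(
        pattern
        for pattern in set(granted_actions)
        if not any(fnmatchcase(action, pattern.lower()) for action in observed)
    )


granted = ["s3:GetObject", "dynamodb:*", "rds:DescribeDBInstances"]
observed_last_90_days = ["s3:GetObject", "s3:GetObject"]
print(unused_grants(granted, observed_last_90_days))
```

Patterns that come back unused are candidates for removal. The observation window is a judgment call, since some permissions are only exercised during rare operations.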

Token Lifespan vs. Exposure Risk Equilibrium (API Auth Tokens): What It Means: Finding a balance between how long credentials are valid and how much damage they can do if stolen. Long-lived tokens, like API keys and access tokens, are convenient, but they are more dangerous if they leak. Ultra-short tokens limit the damage of a breach, but they can make operations harder if they aren’t managed well. From a security point of view, the sweet spot is a token that lasts just long enough to be useful and not a second longer. Watch for keys or tokens that never expire or have very distant expiration dates; this suggests that convenience was prioritized over security. Also watch for teams that manually extend token lifetimes to avoid refreshing them, which hints that renewal processes need improvement. On the other hand, watch for failed integrations or authentication errors caused by tokens expiring too quickly. If developers are quietly hard-coding long-lived credentials to get around short TTLs, you’ve gone too far. Action: Wherever you can, issue short-lived credentials that rotate automatically. Use technologies that make renewal easy, such as OAuth with refresh tokens or dynamic secret managers like Vault, so that people and services rarely notice the short lifetime. Tune TTLs to how long the token is actually used: if a token is typically used for 5 minutes, a 10-minute TTL is enough. Layer aggressive leak detection on top. Because the token doesn’t last long, a leaked token stops being a threat quickly. Work toward a culture where no credential is valid for long, in line with the zero-trust assumption that credentials will eventually leak and should not enable long-term access.
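
The "5 minutes of use, 10-minute TTL" rule of thumb can be turned into a small sizing helper. This is a sketch under stated assumptions: the 95th-percentile choice, the 2x safety factor, and the floor and ceiling values are illustrative defaults, not recommendations from any standard.

```python
import math
from datetime import timedelta


def recommend_ttl(
    usage_durations,
    safety_factor=2.0,
    floor=timedelta(minutes=1),
    ceiling=timedelta(hours=1),
):
    """Suggest a token TTL from observed usage durations.

    Takes the 95th-percentile duration (nearest-rank method), multiplies it
    by a safety factor, and clamps the result between floor and ceiling.
    """
    if not usage_durations:
        raise ValueError("need at least one observed usage duration")
    ordered = sorted(usage_durations)
    rank = max(1, math.ceil(0.95 * len(ordered)))
    typical = ordered[rank - 1]
    return min(max(typical * safety_factor, floor), ceiling)


# Tokens that are consistently used for about 5 minutes get a 10-minute TTL.
observed = [timedelta(minutes=5)] * 20
print(recommend_ttl(observed))
```

The ceiling keeps one unusually long job from dragging every token toward a long lifetime, while the floor avoids TTLs so short that teams start working around them.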

Execution Context Mismatch in Service Mesh Auth (Microservice Interactions): What It Means: A service believes it is talking to one party but is actually talking to another, because identity context has been lost or skipped. Every service in a service mesh receives credentials, like certificates and JWTs, that prove who it is. When those credentials aren’t checked properly, for example when a service accepts requests on an internal port without verifying the caller’s mesh identity because “it’s internal, so it’s fine,” the chain of trust breaks. Watch for authentication or authorization checks that happen only at the edge, such as at the API gateway, and not when services call each other, and for any setup where a sidecar or service doesn’t verify who is calling it. Technical telltales are requests that succeed even with missing or invalid tokens, or manual exceptions in authentication policies (like “allow from 10.0.0.0/8” rules) that don’t check identity. In complex meshes, watch for cases where user identity is passed along but service identity isn’t validated, or where mTLS is nominally enabled but a misconfigured client can still call directly, for example through a misrouted ingress. Action: Make sure that all communication between services verifies the identity of both ends. No matter where a call comes from, each service should prove its own identity and verify its peer’s. Use mutual TLS in the mesh and reject any connection that doesn’t complete the handshake. Make sure that services require and validate context at the application layer. For instance, a service should require a JWT from its upstream caller, even if that caller is on the same network. Make sure your mesh has no shortcut that says “internal = trusted.” For example, if you use Kubernetes with Istio, you can use PeerAuthentication in STRICT mode together with AuthorizationPolicies so that even if someone gets a request into the network, it still needs a valid service identity to be honored. Test your mesh periodically by simulating attacks on it: make calls with wrong or missing credentials and confirm that they fail. Fixing context mismatches removes the openings that attackers exploit when assumptions don’t line up between components.
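
To make the "internal is not trusted" rule concrete, here is a minimal sketch of an application-layer authorization decision that looks only at the caller's verified identity and never at its network location. The `Request` type, the `ALLOWED_CALLERS` table, and the SPIFFE-style IDs are hypothetical; in a real mesh the peer identity would come from the verified mTLS client certificate, not from anything the caller asserts.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical allow-list: which verified identities may call which service.
ALLOWED_CALLERS = {
    "orders-api": {"spiffe://example.org/ns/shop/sa/checkout"},
}


@dataclass(frozen=True)
class Request:
    source_ip: str
    # Identity taken from a verified mTLS peer certificate; None if absent.
    peer_identity: Optional[str]


def authorize(target_service, request):
    """Allow only callers whose verified identity is on the target's allow-list.

    The source IP is deliberately never consulted, so being inside the
    cluster network grants nothing by itself.
    """
    if request.peer_identity is None:
        return False
    return request.peer_identity in ALLOWED_CALLERS.get(target_service, set())


# A direct call from an internal IP without a mesh identity is rejected.
print(authorize("orders-api", Request("10.0.3.7", None)))
print(authorize("orders-api", Request("10.0.3.7", "spiffe://example.org/ns/shop/sa/checkout")))
```

The same cases double as regression tests: a request with no identity, and one with the wrong identity, should both fail no matter which address they come from.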

Credential Rebinding in Ephemeral Runtimes (Containers & Serverless): What It Means: When a workload starts in an ephemeral context, it usually needs keys, tokens, or certificates to reach cloud APIs or databases. Credential rebinding happens when an attacker takes a credential that was meant for a short-lived instance and reuses it in a different context, giving something that was meant to be temporary a longer life or wider reach. For example, an attacker might take a token from a container that is about to be destroyed and use it elsewhere, or after it was supposed to stop being used. Look for evidence that credentials for temporary instances are being used in unintended ways. You might see API calls arriving after an instance has been terminated, or from sources that don’t match the intended workload. For example, a token meant for a European datacenter workload might show up from an Asia Pacific IP. Also watch for hard-coded secrets in short-lived components. For example, if a container image has an API key baked in, it’s easy to copy that key and bind it to something else. This problem is more likely in environments with high workload churn and poor secrets management. Action: Fully embrace ephemeral credentials. Every dynamic workload should get secrets that expire automatically or are cryptographically tied to that workload’s identity and lifetime. One-time tokens, short-lived cloud instance profiles, and workload identity federation are some approaches that prevent trust from being transferred. For instance, use a metadata service or identity provider that issues tokens bound to a specific function or container, so that a token is rejected if it is used anywhere else. Use continuous credential rotation for anything that has to live a long time. Find and revoke credentials that outlive the workload that owns them. If an instance goes down, for example, its keys should be invalidated immediately. By making sure credentials work only in a narrow context, you block attackers from reusing them, which is a common path in cloud breaches.
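
The idea of a credential that is tied to one workload and one lifetime can be illustrated with a small HMAC-signed token. This is a teaching sketch, not a production scheme: the token format, the `SIGNING_KEY`, and both function names are invented for this example, and `presenting_workload_id` is assumed to come from a trusted attestation (such as an mTLS identity), never from the caller's own claim.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Demo key only (an assumption); a real issuer would keep this in a secret store.
SIGNING_KEY = b"demo-only-signing-key"


def _sign(payload: bytes) -> bytes:
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()


def issue_token(workload_id: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """Issue a token bound to one workload ID with a hard expiry."""
    issued_at = time.time() if now is None else now
    payload = json.dumps({"wid": workload_id, "exp": issued_at + ttl_seconds}).encode()
    return ".".join(
        base64.urlsafe_b64encode(part).decode() for part in (payload, _sign(payload))
    )


def verify_token(token: str, presenting_workload_id: str, now: Optional[float] = None) -> bool:
    """Accept the token only if it is intact, unexpired, and presented by its owner."""
    current = time.time() if now is None else now
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:  # wrong number of parts or invalid base64
        return False
    if not hmac.compare_digest(signature, _sign(payload)):
        return False
    claims = json.loads(payload)
    return claims["wid"] == presenting_workload_id and current < claims["exp"]


token = issue_token("fn-a", ttl_seconds=120)
print(verify_token(token, "fn-a"))  # presented by the workload it was issued to
print(verify_token(token, "fn-b"))  # same token, rebound to another workload
```

Because the workload ID is inside the signed payload, a stolen token is useless to any workload whose attested identity differs, and the expiry bounds how long even the rightful owner can use it.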

Shadow API Sprawl (Untracked Endpoints & Services): What It Means: The growth of APIs or services that aren’t documented, monitored, or protected as well as your official APIs. Teams often create shadow APIs when they rush to stand up microservices, leave experiments running, or don’t properly retire old functionality. Because these APIs skip the normal checks, such as code review, security testing, and monitoring, any of them could be a security hole. Everyone might forget about them, except for an attacker who finds them. Be suspicious if your API inventory doesn’t match the traffic you see. For example, if you know of 50 services but network logs show calls to endpoints that suggest 60 or 70 distinct API patterns, you probably have shadow APIs. Security scans might get responses from endpoints that weren’t in your last audit. Internally, watch for developers who create “temporary” APIs or bypass the API gateway for a quick fix; most of the time, these become permanent shadows. Another sign is forgotten DNS names or subdomains that still point to live services. Action: Shine a light on your APIs through continuous discovery. You can use passive traffic analysis (which examines load balancer or proxy logs to detect active endpoints) or active scanning (which regularly probes known domains for undocumented API paths). Build an API catalog that is updated as part of the deployment process, so that no service launches without first registering its endpoints. Encourage a culture in which teams treat APIs like products, with proper versioning and documentation; this makes shadow APIs less likely to appear. To complement the security measures you already have, consider specialized API security tools that can discover and profile unmanaged APIs. Once you locate shadow APIs, either bring them into your regular security program by adding authentication, monitoring, rate limits, and other controls, or retire them if you don’t need them. By stopping shadow sprawl, you close backdoors and make sure that every interaction with the outside world is known and secured.

Why It Matters: From Putting Out Fires to Planning for the Future

When you look at these deeper patterns, security shifts from putting out fires to planning defenses in advance:

- Proactive Resilience: Fixing problems at their source can stop things like IAM drift or token misuse from becoming breaches. For instance, short-lived credentials limit damage by design, and continuous discovery means fewer surprises. This proactive approach makes security an enabler of cloud innovation instead of an obstacle.

- Response Accuracy: Good pattern recognition lets security professionals pin down exactly what happened. Instead of just reporting that something went wrong, you can explain why. For example: “X caused a shadow API to be accessed; we’ve fixed it and set a policy to catch others.” This level of detail reassures stakeholders and prevents knee-jerk reactions that slow down development.

- Adaptive Learning: Once you know these patterns, you can use them to improve engineering and operations. For example, developers start to plan for these needs by designing new services that obtain temporary credentials from the start, or by adding IAM reviews to the release cycle. The whole organization becomes fluent in the new language of cloud security, which makes it harder for attackers to get in.

Final Thoughts: Navigating the New Attack Surface

Cloud and API-based applications change too quickly and too often for traditional security procedures to keep up. Just as basic uptime checks won’t tell you why a cloud application is failing, basic security controls won’t tell you why a breach could happen. The advanced patterns discussed here are the diagnostics that reveal how secure your cloud really is. In this age of multi-cloud, microservices, and machine-to-machine communication, they show us exactly how things could go wrong. If you adopt these principles, security stops being a fixed gate and becomes a continuous process of diagnosis. In my experience, teams that truly understand these ideas are not just safer but also more adaptable. They can confidently adopt new technologies like serverless, service meshes, and multi-cloud strategies because they know how to handle the risks that come with them. Cloud and APIs are the new attack surface, but we can manage it by looking more closely and applying the right patterns. That turns potential chaos into a system we can control and continuously improve.